Job execution (Hexagon)


Latest revision as of 13:07, 21 June 2018

Batch system

To ensure fair use of the clusters, all users have to run their computations via the batch system. A batch system is a program that manages the queuing, scheduling, starting and stopping of jobs/programs users run on the cluster. Usually it is divided into a resource-manager part and a scheduler part. To start jobs, users specify to the batch system which executable(s) they want to run, the number of processors and amount of memory needed, and the maximum amount of time the execution should take.

Hexagon uses "SLURM" as the resource manager. In addition, Hexagon uses aprun to execute jobs on the compute nodes, regardless of whether the job is an MPI job or a sequential job. The user therefore has to make sure to call "aprun ./executable" or "srun ./executable", and not just the executable, if it is to run on the compute part instead of the login-node part of the Cray.

Node configuration

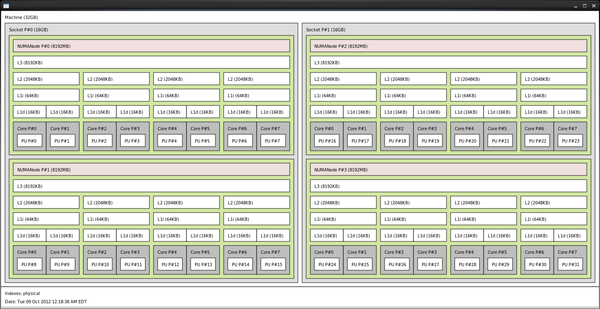

Each Hexagon node has the following configuration:

- 2 x 16 cores Interlagos CPUs

- 32 GB of RAM

For core to memory allocation please refer to the following illustration:

NOTE: Only one job can run on each node at a time (nodes are dedicated). Therefore, for better node utilization, please specify as few limitations as possible in the job and leave the rest to be decided by the batch system.
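Because nodes are dedicated and each has 32 cores, the number of nodes a job occupies follows from the requested task count by rounding up. The following is a minimal sketch of that arithmetic in bash; the function name nodes_needed is hypothetical and not part of any Hexagon tool.

```shell
#!/bin/bash
# Hypothetical helper: how many dedicated nodes a job will occupy.
# Assumes 32 cores per node (2 x 16-core CPUs), as listed above.
nodes_needed() {
    local ntasks=$1
    local per_node=${2:-32}   # tasks placed on each node; default fills the node
    # Integer ceiling division: a partly used node is still a whole node.
    echo $(( (ntasks + per_node - 1) / per_node ))
}

nodes_needed 512      # prints 16
nodes_needed 33       # prints 2
nodes_needed 32 16    # prints 2
```

A job with 33 tasks therefore occupies two whole nodes, which is worth keeping in mind when choosing task counts.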

Batch job submission

There are essentially two ways to execute jobs via the batch system.

- Interactive. The batch system allocates the requested resources or waits until these are available. Once the resources are allocated, interaction with these resources and your application is via the command-line and very similar to what you normally would do on your local (Linux) desktop. Note that you will be charged for the entire time your interactive session is open, not just during the time your application is running.

- Batch. One writes a job script that specifies the required resources and executables and arguments. This script is then given to the batch system that will then schedule this job and start it as soon as the resources are available.

Running jobs in batch is the more common way on a compute cluster. Here, one can e.g. log off and log on again later to see what the status of a job is. We recommend running jobs in batch mode.

Create a job (scripts)

Jobs are normally submitted to the batch system via shell scripts, and are often called job scripts or batch scripts. Lines in the scripts that start with #SBATCH are interpreted by SLURM as instructions for the batch system. (Please note that these lines are interpreted as comments when the script is run in the shell, so there is no magic here: a batch script is a shell script.)

Scripts can be created in any text editor, e.g. vim or emacs.

A job script should start with an interpreter line, such as:

#!/bin/bash

Next it should contain directives to the queue system, at least the execution time and the number of CPUs requested:

#SBATCH --time=30:00
#SBATCH --ntasks=64

The rest consists of regular shell commands. Please note: any #SBATCH directive that appears after the first regular command will be ignored.
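Since misplaced directives are ignored silently, it can help to check a script before submitting it. The following is a minimal sketch of such a check; the function name late_directives is hypothetical, and it only looks at lines that start with #SBATCH.

```shell
#!/bin/bash
# Hypothetical lint: report #SBATCH lines that appear after the first
# regular command, where the batch system no longer reads them.
late_directives() {
    awk '
        /^#SBATCH/        { if (seen_cmd) print FILENAME ":" FNR ": " $0; next }
        /^[[:space:]]*#/  { next }   # shebang and ordinary comments
        /^[[:space:]]*$/  { next }   # blank lines
                          { seen_cmd = 1 }
    ' "$1"
}

# Intended use (not executed here):
#   late_directives job.sh
```

The function prints nothing when every directive precedes the first command; otherwise it prints the file name, line number and the offending directive.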

All commands written in the script will be executed on the login node. This is important to remember for several reasons:

- Commands like gzip/bzip2 or even cp for many files can create heavy load on CPU and network interface. This will result in low or unstable performance for such operations.

- Overuse of memory or CPU resources on login node can crash it. This means all jobs (from all users) which were started from that login node will crash.

With this in mind, all I/O- or CPU-intensive tasks should be prefixed with the aprun command. aprun executes the command on the compute nodes, resulting in higher performance. Note that this should also reduce what the job is charged, since the total time the script runs should be shorter (charging does not take into account whether the compute nodes are actually used while the script runs).

Real computational tasks (the main program) should of course be prefixed with aprun as well.

You can find examples below.

Manage a job (submission, monitoring, suspend/resume, canceling)

Please find below the most important batch system job management commands:

To submit a job use the sbatch command.

sbatch job.sh # submit the script job.sh

Queues and priorities are chosen automatically by the system. The sbatch command returns a job identifier (number) that can be used to monitor the status of the job (in the queue and during execution). This number may also be requested by the support staff.
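To reuse the job identifier in later commands, it can be captured when the job is submitted. The following is a minimal sketch, assuming sbatch prints its usual line of the form "Submitted batch job <number>"; the function name extract_jobid is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: keep only the job number from the line that
# sbatch prints on submission ("Submitted batch job <number>").
extract_jobid() {
    # Strip everything up to and including the last space.
    echo "${1##* }"
}

# Intended use on the login node (not executed here):
#   jobid=$(extract_jobid "$(sbatch job.sh)")
#   squeue -j "$jobid"
extract_jobid "Submitted batch job 12345"   # prints 12345
```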

sinfo - reports the state of partitions and nodes.

squeue - reports the state of jobs or job steps.

sbatch - submit a job script for later execution.

scancel - cancel a pending or running job or job step

srun - submit a job for execution or initiate job steps in real time

apstat - Provides status information for Cray XT systems applications

xtnodestat - shows information about compute and service partition processors and the jobs running in each partition

For more information on the Slurm commands, please check their man pages.

General commands

Get documentation on a command:

man sbatch
man squeue

Information on jobs

List all current jobs for a user:

squeue -u <username>

List all running jobs for a user:

squeue -u <username> -t RUNNING

List all pending jobs for a user:

squeue -u <username> -t PENDING

List detailed information for a job:

scontrol show jobid <jobid> -dd

List status info for a currently running job:

sstat --format=AveCPU,AvePages,AveRSS,AveVMSize,JobID -j <jobid> --allsteps

When your job has completed, more information is available, including run time and memory used. To get statistics on completed jobs:

sacct -j <jobid> --format=JobID,JobName,MaxRSS,Elapsed

To view the same information for all jobs of a user:

sacct -u <username> --format=JobID,JobName,MaxRSS,Elapsed

Controlling jobs

To cancel one job:

scancel <jobid>

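To temporarily hold a job:

scontrol hold <jobid>

To release a held job so that it can be scheduled again:

scontrol release <jobid>

(scontrol resume applies to jobs that were stopped with scontrol suspend.)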

List of useful job script parameters

- -A: a job script must specify a valid project name for accounting; otherwise it will not be possible to submit jobs to the batch system.
- -t: a job script must specify the time the job needs; otherwise it will run for the default of 15 minutes.

For additional sbatch switches please refer to:

man sbatch

srun and aprun

On Hexagon there are two different ways of starting executables in a Slurm script. Executables can be started with srun, which requires the user to fine-tune the --ntasks and --ntasks-per-node parameters in the sbatch script, for example:

#!/bin/bash
#SBATCH --comment="MPI"
#SBATCH --ntasks=32
#SBATCH --ntasks-per-node=16
cd /work/users/jack
srun ./mpitest

Executables can also be started with aprun. In that case the user only has to specify the total number of cores needed with --ntasks, and can then use aprun to fine-tune the rest of the parameters:

#!/bin/bash
#SBATCH --account=vd
#SBATCH --time=00:10:00
#SBATCH --comment="MPI"
#SBATCH --ntasks=32
cd /work/users/jack
aprun -n32 -N32 -m1000M ./mpitest

APRUN arguments

The resources you request with #SBATCH directives have to match the arguments given to aprun. So if you ask for "#SBATCH --mem=900mb" you will need to add the argument "-m 900M" to aprun.

| aprun option | Meaning | Must match |
| --- | --- | --- |
| -B | use parameters from the batch system (mppwidth, mppnppn, mppmem, mppdepth) | |
| -N | processors per node | the value of mppnppn |
| -n | processing elements | the value of mppwidth |
| -d | number of threads | the value of mppdepth |
| -m | memory per element, with suffix | the amount of memory requested by mppmem; the suffix should be M |

A complete list of aprun arguments can be found on the man page of aprun.
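One way to keep the aprun arguments in step with the batch request is to build the command line from the same numbers. The following is a minimal sketch; the function name aprun_cmdline is hypothetical, and it only prints the command rather than running it.

```shell
#!/bin/bash
# Hypothetical helper: print an aprun command line whose -n, -N and -m
# values come from the same numbers used in the #SBATCH directives.
aprun_cmdline() {
    local ntasks=$1 per_node=$2 mem_mb=$3
    shift 3
    # The remaining arguments are the program and its own arguments.
    echo "aprun -n $ntasks -N $per_node -m ${mem_mb}M $*"
}

aprun_cmdline 32 32 1000 ./mpitest   # prints aprun -n 32 -N 32 -m 1000M ./mpitest
```

Printing the command first makes it easy to compare against the #SBATCH lines before the job is submitted.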

List of classes/queues, incl. short description and limitations

Hexagon uses a default batch queue named "hpc". It is a routing queue which based on job attributes can forward jobs to the debug, small or normal queues. Therefore there is no need to specify any execution queue in the sbatch script.

Please keep in mind that we have priority-based job scheduling. This means that, based on the requested amount of CPU and time as well as previous usage history, jobs will get higher or lower priority in the queue. Please find a more detailed explanation in Job execution (Hexagon)#Scheduling policy on the machine.

Relevant examples

We illustrate the use of sbatch job scripts and submission with a few examples.

Sequential jobs

To use 1 processor (CPU) for at most 60 hours wall-clock time and all memory on 1 node (32000mb), the job script must contain the lines:

#SBATCH --ntasks=1
#SBATCH --time=60:00:00

Below is a complete example of a Slurm script for executing a sequential job.

#!/bin/bash
#SBATCH -J "seqjob" # Give the job a name (optional)
#SBATCH -A CPUaccount # Specify the project the job should be accounted on (obligatory)
#SBATCH -n 1 # one core needed
#SBATCH -t 60:00:00 # run time in hh:mm:ss
#SBATCH --mail-type=ALL # Mail events (NONE, BEGIN, END, FAIL, ALL)
#SBATCH --mail-user=forexample@uib.no # email to user
#SBATCH --output=serial_test_%j.out # Standard output and error log
cd /work/users/$USER/ # Make sure I am in the correct directory
aprun -n 1 -N 1 -m 32000M ./program

Parallel/MPI jobs

To use 512 CPUs (cores) for at most 60 hours wall-clock time, below is an example:

#!/bin/bash
#SBATCH -J "mpijob" # Give the job a name
#SBATCH -n 512 # we need 512 cores
#SBATCH -t 60:00:00 # time needed
#SBATCH --mail-type=ALL # Mail events (NONE, BEGIN, END, FAIL, ALL)
#SBATCH --mail-user=forexample@uib.no # email to user
#SBATCH --output=serial_test_%j.out # Standard output and error log
cd /work/users/$USER/
aprun -B ./program

Please refer to the IMPORTANT statements in the Job execution (Hexagon)#Parallel/OpenMP jobs paragraph.

Creating dependencies between jobs

Documentation is coming soon.
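In the meantime, the following is a minimal sketch based on the standard sbatch --dependency option with the afterok condition. Whether Hexagon's configuration places further restrictions on dependencies is not covered here, and the function name dependency_flag is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: build the sbatch option that makes a job wait
# until all listed jobs have finished successfully (afterok).
dependency_flag() {
    local IFS=:
    # "$*" joins the job IDs with the first character of IFS.
    printf '%s\n' "--dependency=afterok:$*"
}

# Intended use on the login node (not executed here):
#   sbatch "$(dependency_flag 1001 1002)" second_step.sh
dependency_flag 1001 1002   # prints --dependency=afterok:1001:1002
```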

Combining multiple tasks in a single job

In some cases it is preferable to combine several aprun's inside one batch script. This can be useful in the following cases:

- Several executions must be started one after another with the same number of CPUs (a better use of resources for this can be to use job dependencies in your sbatch script).

- Runtime of each aprun is shorter than e.g. one minute. By combining several of these short tasks together you avoid that the job will spend more time waiting in the queue and starting up than being executed.

It should be written like this in the script:

aprun -B ./cmd args
aprun -B ./cmd args
...
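When several steps are chained this way, it is often useful to stop as soon as one of them fails. The following is a minimal sketch of such a loop; the function name run_steps is hypothetical, and it relies on word splitting, so it is not suitable for arguments that contain spaces or wildcards.

```shell
#!/bin/bash
# Hypothetical helper: run several short steps one after another through
# the same launcher and stop at the first failure.
run_steps() {
    local launcher=$1
    shift
    local step
    for step in "$@"; do
        # Unquoted on purpose so "aprun -B" and "./cmd args" split into words.
        $launcher $step || { echo "step failed: $step" >&2; return 1; }
    done
}

# In a job script (not executed here):
#   run_steps "aprun -B" "./cmd args1" "./cmd args2"
```

Passing the launcher as the first argument keeps the loop testable outside the batch system, for example with echo in place of aprun.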

Interactive job submission

Documentation is coming soon.

srun --pty bash -i

Note that you will be charged for the full time this job allocates the CPUs/nodes, even if you are not actively using these resources. Therefore, exit the job (shell) as soon as the interactive work is done. To launch your program on the compute node you go to /work/users/$USER and then you HAVE to use "aprun". If "aprun" is omitted the program is executed on the login node, which in the worst case can crash the login node. Since /home is not mounted on the compute node, the job has to be started from /work/users/$USER.

General job limitations

Documentation is coming soon.

Default CPU and job maximums may be changed by sending an application to Support.

Recommended environment variable settings

Documentation is coming soon.

Sometimes module functions are not exported properly. If you get module: command not found, try adding this to your job script:

export -f module

If you still can't get module functions in your job script try to add this:

source ${MODULESHOME}/init/REPLACE_WITH_YOUR_SHELL_NAME

# ksh example:

# source ${MODULESHOME}/init/ksh
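The second remedy can be wrapped so that the init file is sourced only when the module command is actually missing. The following is a minimal sketch; the function name ensure_module is hypothetical, and it assumes MODULESHOME is set in the job environment as in the lines above.

```shell
#!/bin/bash
# Hypothetical helper: source the module init file for the given shell,
# but only when the module command is not already available.
ensure_module() {
    local shell_name=${1:-bash}
    if command -v module >/dev/null 2>&1; then
        return 0                      # already available, nothing to do
    fi
    if [ -r "${MODULESHOME:-}/init/${shell_name}" ]; then
        . "${MODULESHOME}/init/${shell_name}"
    else
        echo "cannot find module init file for ${shell_name}" >&2
        return 1
    fi
}

# In a job script (not executed here):
#   ensure_module bash || exit 1
```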

MPI on Hexagon is highly tunable. Sometimes you may receive messages saying that some MPI variables have to be adjusted. In this case just add the recommended export line to your job script on a line before the aprun command. The messages with recommendations are normally quite verbose. For example (bash syntax):

export MPICH_UNEX_BUFFER_SIZE=90000000

Redirect output of running application to the /work file system. See: Data (Hexagon)#Disk quota and accounting:

aprun .... >& /work/users/$USER/combined.out
aprun .... >/work/users/$USER/app.out 2>/work/users/$USER/app.err
aprun .... >/work/users/$USER/app.out 2>/dev/null

Scheduling policy on the machine

The scheduler on Hexagon has a fairshare setup in place. This ensures that all users get adjusted priorities, based on initial and historical data from running jobs. Please check Queue priorities (Hexagon) for a better understanding of the queuing system on Hexagon.

Types of jobs that are prioritized

Documentation is coming soon.

Types of jobs that are discouraged

Documentation is coming soon.

Types of jobs that are not allowed (will be rejected or never start)

Documentation is coming soon.

CPU-hour quota and accounting

Documentation is coming soon.

How to list quota and usage per user and per project

Documentation is coming soon.

FAQ / trouble shooting

Please refer to our general FAQ (Hexagon)